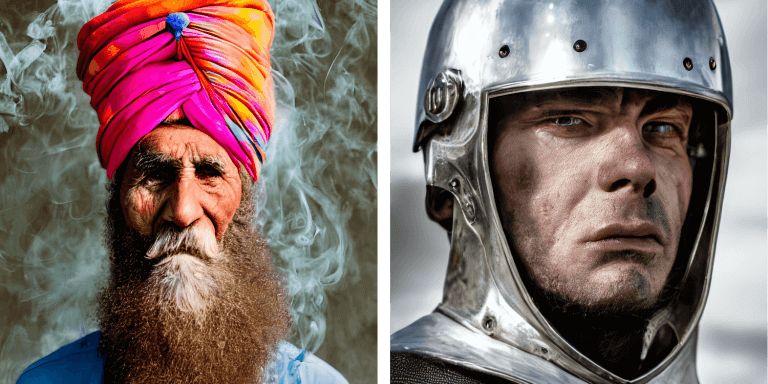

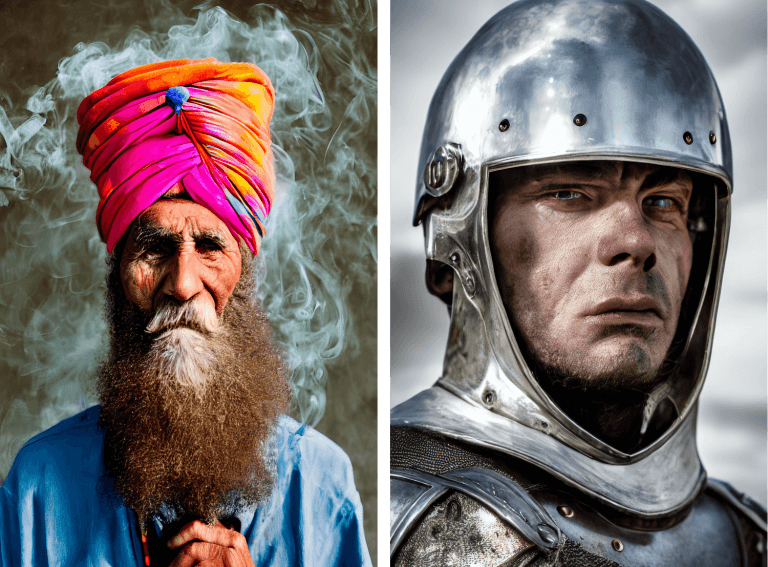

Striking artistic representations can be created in seconds from textual descriptions

We are witnessing a fascinating evolution in Artificial Intelligence capabilities: the ability to generate spectacular images from simple textual descriptions. Will it completely change the way people make art? That is the question this article sets out to discuss.

A few words turned into art

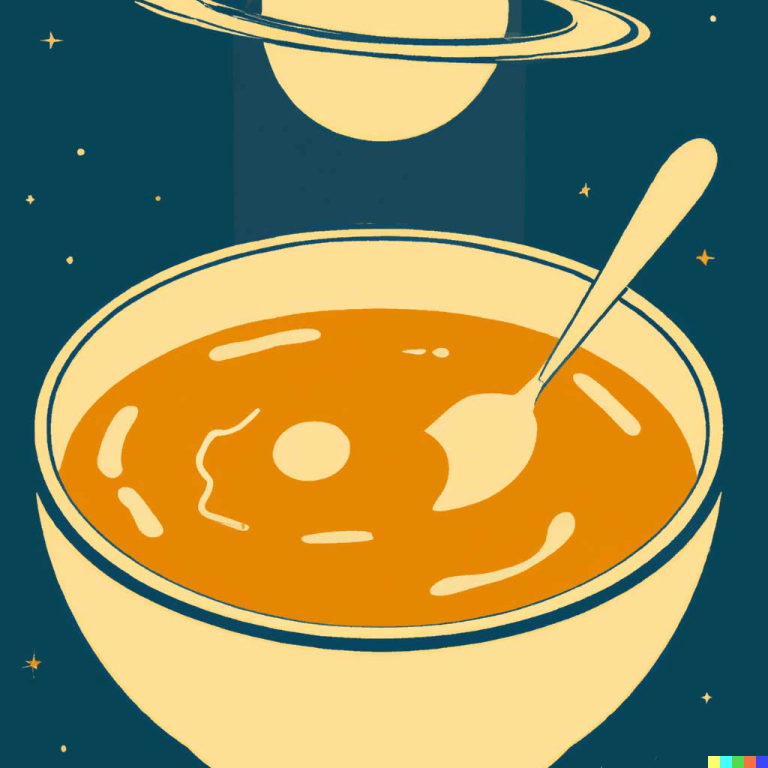

“A bowl of soup as a planet in the universe, stylized as a 1960s poster.” A disjointed phrase, apparently. However, in a world where technological advances emerge and evolve at impressive speed, a sentence like this can become art in the time it took you to say those words.

This is possible thanks to the latest darling of the Artificial Intelligence field: the creation of original images, realistic or artistic, from phrases that we can utter naturally. A bowl of soup as a planet in the universe stylized as a 1960s poster, for example. The result, created by the DALL-E 2 system, is the image shown in this section.

DALL-E 2

DALL-E sounds just like the name of an artist famous both for his works and for his mustache: Salvador Dalí i Domènech, 1st Marquis of Dalí de Púbol. Or simply Dalí, to everyone who knows his art.

DALL-E 2 is the brainchild of research and development company OpenAI, whose stated mission is “to ensure that artificial general intelligence benefits all of humanity”. By “artificial general intelligence”, they mean “highly autonomous systems that outperform humans at most economically valuable work”, in the words of the company, which has Microsoft as one of its investors.

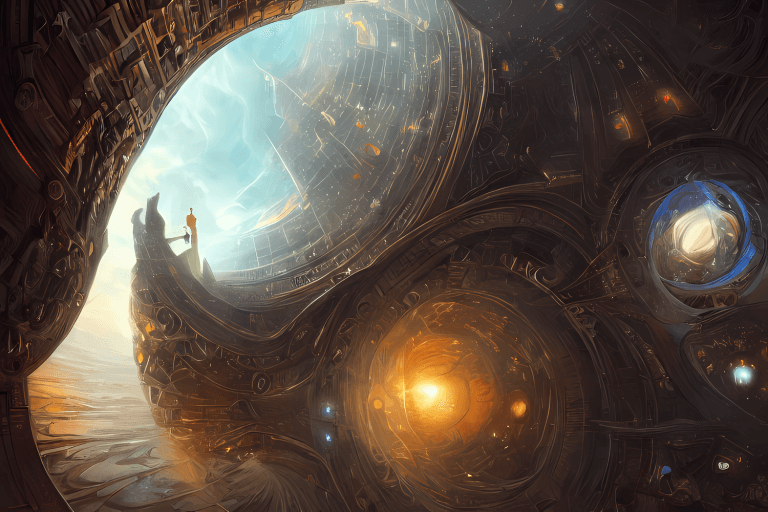

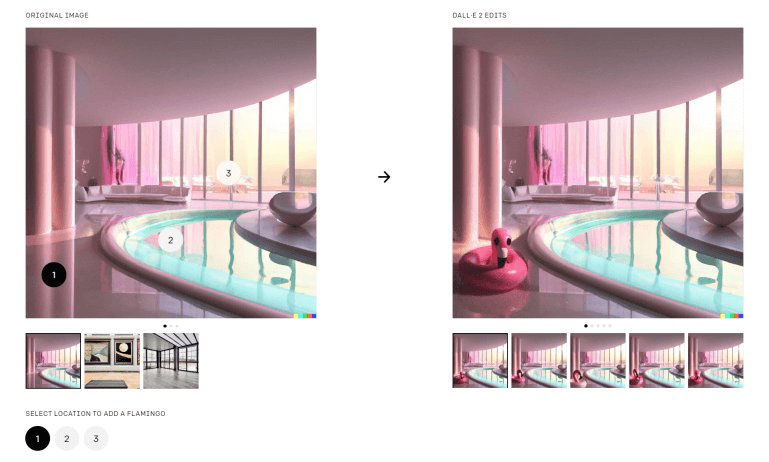

The first generation, DALL-E, was introduced in January 2021. The second generation, revealed in April 2022, brings higher resolution, better understanding and new capabilities. Beyond creating images, DALL-E 2 can edit existing works, for example by changing or expanding the background and adding or removing elements, always taking into account details such as shadows, reflections and textures.

DALL-E 2 knows how to establish relationships between individual elements. In other words, besides being able to identify the image of a koala and the image of a motorcycle, the technology knows how to relate the two and thus depict a koala riding a motorcycle. This is how it can understand when someone describes a koala riding a motorcycle in words.

The creators of DALL-E 2 point to three main positive results of the technology. The first is its originality, as it unleashes people's creativity to a level that has not been observed until now. The second is the greater understanding we can have about artificial intelligence and machine learning from the generated images. That is, by describing an image and observing the results, we can analyze whether the system really understands what it is told or if it just reproduces what it was taught.

The example given by the creators of DALL-E 2 is the expression “tree bark”, that is, the outer covering of the trunk, branches, and roots of trees and other woody plants. When given the description “tree bark”, DALL-E 2 is expected to produce the correct image rather than, for example, a dog barking at a tree.

The third positive result of DALL-E 2 is that it helps humans understand how artificial intelligence systems see and understand our world, which is essential for such systems to be secure.

DALL-E 2, like all AI systems, is like a student: it learns from the information it is given. If a child is taught that the word “car” corresponds to the image of an airplane, the child will establish the wrong relationship between the two. The same can happen with DALL-E 2.

The system also has limitations with ambiguous wording. Take “howler monkey”, for example. If the system is not taught that there is a species by that name, then on receiving the description “howler monkey” it will not depict that species correctly; instead, it will show some species of monkey in the act of howling.

DALL-E 2 also has limitations intentionally imposed by its development team. For example, violent, hateful or adult content was limited in the platform's training data, which reduces the system's ability to generate such images. Filters are also in place to identify text descriptions and images that violate these policies.

The system is also unable to generate faces of real people, which matters in the age of deepfakes, that is, videos in which real people appear to say something they never actually said. The content is manipulated, literally putting words in the person's mouth.

DALL-E 2 has been in beta since July 2022, open to a larger number of people than at its launch. The platform is free, but with a limit on the number of images that can be created at no cost. It can be accessed at this link.

Stable Diffusion

Stable Diffusion is another initiative for generating images from textual descriptions. The company responsible is Stability AI, which focuses on developing AI models for image, language, audio, video, 3D and biology.

Those responsible for Stable Diffusion highlight the democratic nature of the tool, since it needs no more than 10 GB of VRAM to generate 512x512-pixel images in a few seconds.

Stable Diffusion has several components. One of them is responsible for understanding the textual description: each word of the text is transformed into a token, a kind of numeric identifier, by a Transformer language model. These numbers are sent to another component, the Image Generator, which processes the information in order to obtain a high-quality result. This information is then sent to the Image Decoder, which uses it to produce the final artwork.
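To make the idea of turning words into identifier numbers concrete, here is a minimal sketch. It is not the tokenizer Stable Diffusion actually uses (real systems typically split text into sub-word pieces using a large, pre-built vocabulary); the functions `build_vocab` and `tokenize` and the tiny vocabulary are assumptions made up for illustration.

```python
def build_vocab(texts):
    """Assign an integer id to every distinct lowercase word (toy vocabulary)."""
    vocab = {}
    for text in texts:
        for word in text.lower().split():
            if word not in vocab:
                vocab[word] = len(vocab)
    return vocab


def tokenize(text, vocab, unknown_id=-1):
    """Replace each word with its id; words outside the vocabulary get unknown_id."""
    return [vocab.get(word, unknown_id) for word in text.lower().split()]


# Hypothetical one-sentence "training set" used only to build the vocabulary.
vocab = build_vocab(["a koala riding a motorcycle"])
tokens = tokenize("a koala riding a motorcycle", vocab)
print(tokens)
```

The repeated word "a" receives the same id both times, which is the point: the rest of the system works with numbers, not with letters.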

The result is displayed in seconds, but such agility hides a complex process that takes place in a step-by-step fashion, with each step adding relevant information for the construction of the final result.

A key component here is the Image Information Creator. In addition to tokens, this creator uses arrays of initial, random visual information, also called latents. In the context of this technology, latents are arrays of information that start out as visual noise, the name given to any random visual stimulus. This is the starting point for the composition of the image.

Each step takes an array and produces another array that is closer to the textual description that started the process. At each step, the array gains new information drawn from the material on which the technology was trained, and the latents are gradually transformed into detailed images that match the textual description.

In other words, think of a TV channel being tuned in. At first, the image may be nothing more than static. However, at each step, the static gains information and is transformed into a clear and detailed image.
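The step-by-step refinement described above can be sketched with a toy loop. This is only an illustration under strong assumptions: in a real diffusion model a trained neural network, guided by the text, decides how to change the array at each step, whereas here the `target` values are simply known in advance. The names `denoise`, `distance`, `steps` and `rate` are hypothetical.

```python
import random


def denoise(latent, target, steps=50, rate=0.1):
    """Move a noisy array a small fraction closer to the target at every step."""
    history = [list(latent)]
    for _ in range(steps):
        latent = [x + rate * (t - x) for x, t in zip(latent, target)]
        history.append(list(latent))
    return history


def distance(a, b):
    """Euclidean distance between two equally sized arrays."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


random.seed(0)
target = [0.0, 0.25, 0.5, 0.75, 1.0]              # stand-in for the finished image
noise = [random.gauss(0.0, 1.0) for _ in target]  # random starting latents
history = denoise(noise, target)
errors = [distance(h, target) for h in history]
print(f"error at start: {errors[0]:.3f}, error at end: {errors[-1]:.5f}")
```

Each pass removes a tenth of the remaining error, so the array drifts from pure noise toward the target, much like the static slowly resolving into a picture.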

Stable Diffusion has its source code available. Its license does have usage limitations, however, and does not allow the use of the tool for committing crimes, defamation, harassment, improper exploitation, giving medical advice, automatically creating legal obligations, producing legal evidence, etc. The user owns the rights to the generated images and is free to use them commercially. Click here to access the platform.

Diffusion

Briefly, diffusion is the name given to the process these systems use to turn text into high-quality images. The initial, random visual noise starts out as nothing more than that: a visual stimulus. However, at each processing step, this noise gains more and more information until it becomes the final detailed image that is consistent with the textual description.

What takes the final result from simple noise to a detailed composition comes from the training of the technology, that is, from the visual information it was supplied with and the corresponding textual descriptions.

Both platforms discussed here use CLIP technology, composed of image and text encoders and trained on 400 million images and their respective descriptions. Stable Diffusion, specifically, was trained on a dataset focused on aesthetically pleasing images, so images produced with this technology tend to be aesthetically pleasing.
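A rough way to picture what paired image and text encoders make possible: if both encoders turn their input into lists of numbers in the same space, a text and an image can be compared directly. The sketch below assumes that comparison is done with cosine similarity, and every vector in it is invented by hand for illustration; real encoders produce vectors with hundreds of values learned from data.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical outputs of a text encoder for two descriptions.
text_embeddings = {
    "tree bark": [0.9, 0.1, 0.0],
    "a dog barking at a tree": [0.2, 0.9, 0.1],
}
# Hypothetical output of an image encoder for a photo of a tree trunk.
image_embedding = [0.8, 0.2, 0.1]

scores = {
    caption: cosine_similarity(vector, image_embedding)
    for caption, vector in text_embeddings.items()
}
best_caption = max(scores, key=scores.get)
print(best_caption)
```

With these made-up numbers the trunk photo scores highest against "tree bark", which is the kind of text-to-image correspondence the training data is meant to teach.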

Diffusion is used by both Stable Diffusion and DALL-E 2, as well as by other technologies that turn text into images. But Stable Diffusion does something different: the diffusion process takes place on a compressed version of the image, which speeds up the process.
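A quick calculation suggests why working on a compressed version helps. The figures below are assumptions not stated in this article: they follow what is publicly documented for the first Stable Diffusion releases, where each side of the image is reduced by a factor of 8 and the compressed array has 4 channels.

```python
# Full-size RGB image: width x height x 3 color channels.
image_side = 512
image_values = image_side * image_side * 3

# Compressed (latent) version; both numbers below are assumptions.
downsampling_factor = 8
latent_channels = 4
latent_side = image_side // downsampling_factor
latent_values = latent_side * latent_side * latent_channels

ratio = image_values / latent_values
print(f"pixel values: {image_values}, latent values: {latent_values}, ratio: {ratio:.0f}x")
```

Under these assumptions, each diffusion step handles about 48 times fewer numbers than it would on the full 512x512 image.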

But wait, there’s more

Believe it: there is already technology that creates videos from textual descriptions, going beyond the generation of still images. Recently, Meta, responsible for Facebook, Instagram and WhatsApp, among other technological ventures, presented Make-A-Video, an artificial intelligence system that creates short video clips from texts. It is a demonstration of the creative capacity achieved by Meta's research team, and the promise is to open up new opportunities for creators and artists.

According to Meta, “The system learns what the world looks like from paired text-image data and how the world moves from video footage with no associated text. With just a few words or lines of text, Make-A-Video can bring imagination to life and create one-of-a-kind videos full of vivid colors, characters, and landscapes. The system can also create videos from images or take existing videos and create new ones that are similar”.

Research on Make-A-Video will continue, and Meta promises to be transparent about the results. Click here to access the platform and learn more.

Visionary Technology

We at Visionnaire are obsessed with incipient and promising technologies such as generating images from textual descriptions. It is with great excitement that we are experiencing yet another revolution provided by Artificial Intelligence and Machine Learning.

We are part of one of the most dynamic industries in the world, if not the most dynamic: software development, which is renewed every day with fascinating novelties such as the creation of unprecedented, detailed images in seconds.

Our mission is to constantly renew our way of developing software, with new perspectives on solutions and business models. And we’re certainly thinking of ways to make good use of tools like Stable Diffusion and DALL-E 2. After all, being visionary is not only in our name, but also in our essence.

We may well be facing a total transformation in the way art is produced. At this moment, as with every incipient technology, the transformation of text into images is only starting to be democratized. This means that it is not yet a technology available to the masses. In this sense, Stable Diffusion plays an important role, as it is more accessible than the competition.

Another important aspect of incipient technologies is their ethical and moral use. Both Stable Diffusion and DALL-E 2 take steps to ensure that the technologies are not used in a harmful way. And the level of control over the security of a new technology is also something that determines its success or failure.

Also, as with any fledgling technology, there are mixed reactions. Purists will not consider ready-made images to be art; the most avant-garde will not think twice about incorporating these technologies into their creative process.

The debate is open, from questions about what art is (if it can be defined easily) to doubts about how technology has impacted the production of art. Recent technologies like blockchain and NFTs have certainly changed the way art is disseminated and commercialized. Now, paradigms about art production may be about to change forever.